Text to Diagram GPT


The GPT-3 Architecture, on a Napkin

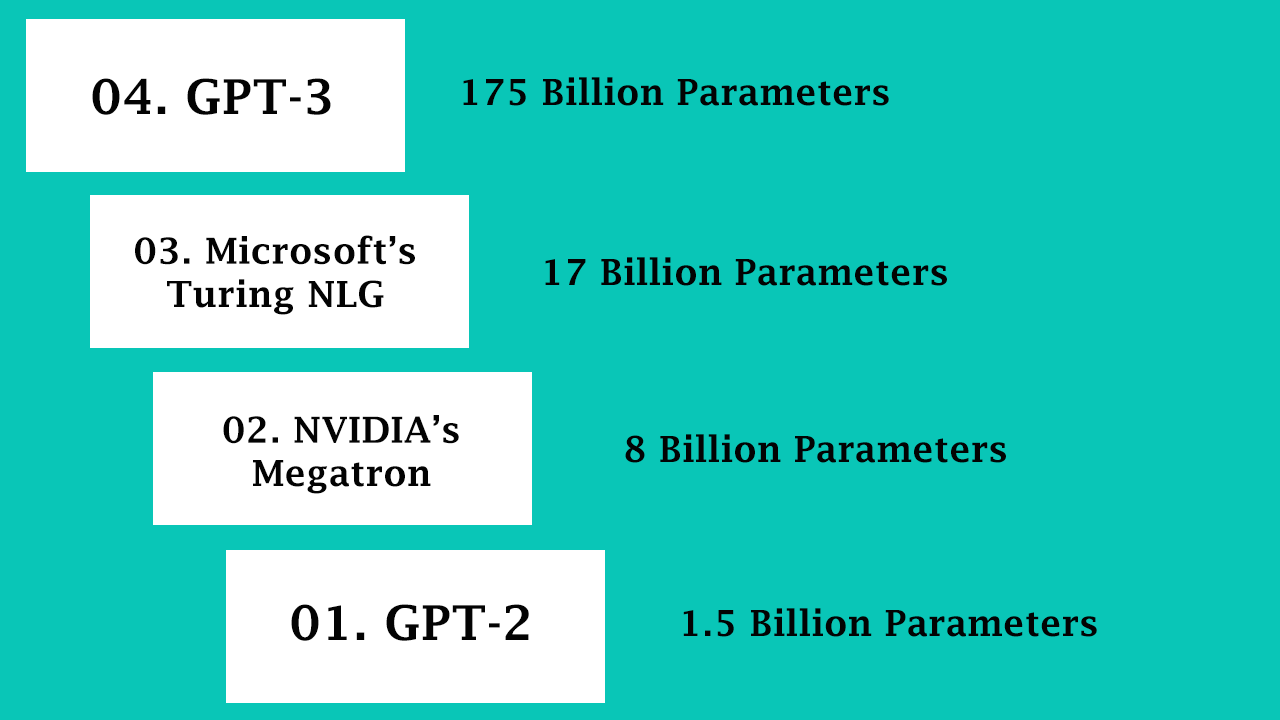

Comparison between BERT, GPT-2 and ELMo

OpenAI's text-generating system GPT-3 is now spewing out 4.5 billion


Understanding GPT-3

GPT-3 and its probable use cases

How GPT-3 works

Generalized language models: OpenAI GPT's transformer decoder compared with BERT and ELMo (Lil'Log, Lilian Weng)

25 Best GPT Sites: Get Paid To Sites, Apps, Tips and FAQs (2024)

Understanding GPT-3 - Future of AI Text Generation - Evdelo

GPT Explained | Papers With Code

The GPT-3 Architecture, on a Napkin

GitHub - akanyaani/gpt-2-tensorflow2.0: OpenAI GPT2 pre-training and

GPT-3 and its Probable Use Cases

Comparison between BERT, GPT-2 and ELMo | by Gaurav Ghati | Medium